Our robots now boast a new, longer neck that raises the camera position. It makes them look like giraffes, but it’s part of a set of improvements being implemented for our next demo. The other improvements include testing a new camera that handles light better, implementing better on-board face detection and reducing the volume of network traffic.

The stage is now set for our robots to show off their capabilities so far. The robots will map out an area and scan it for humans using search patterns. Each detected human will be identified using facial recognition, and all information is instantly shared with the other robot via the CCMCatalog. Let’s see how they’ll do!

Preparation of our robots is now in full swing to get them up and running for our first demo. The CCMCatalog is almost done and can handle the sharing and negotiation of observations and tasks between the two robots. Face detection has been integrated, and the new body-and-legs detector is now being tested. Basic navigation is working, and we are building the operator interface in the PsyProbe web interface.
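
The CCMCatalog's actual interface is not described in these posts, so the following is only a minimal, hypothetical Python sketch of the idea of two robots sharing observations and negotiating tasks. The names `SharedCatalog`, `Observation`, `post`, `seen_by_others` and `negotiate` are invented for illustration, and the negotiation rule (a simple lowest-cost auction with ties broken by robot id) is an assumption, not necessarily how the CCMCatalog works.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Observation:
    robot_id: str                  # which robot made the observation
    label: str                     # e.g. an identified person
    position: Tuple[float, float]  # (x, y) in an assumed shared map frame


@dataclass
class SharedCatalog:
    """Hypothetical stand-in for a catalog shared by two robots."""
    observations: List[Observation] = field(default_factory=list)
    task_owner: Dict[str, str] = field(default_factory=dict)

    def post(self, obs: Observation) -> None:
        # Every posted observation becomes visible to all robots.
        self.observations.append(obs)

    def seen_by_others(self, robot_id: str) -> List[Observation]:
        # What this robot learns from its peers rather than its own sensors.
        return [o for o in self.observations if o.robot_id != robot_id]

    def negotiate(self, task: str, bids: Dict[str, float]) -> str:
        # Assumed rule: the lowest cost bid wins; ties are broken by robot id
        # so that both robots reach the same decision independently.
        winner = min(bids, key=lambda rid: (bids[rid], rid))
        self.task_owner[task] = winner
        return winner


catalog = SharedCatalog()
catalog.post(Observation("robot_a", "person_1", (2.0, 3.5)))
print([o.label for o in catalog.seen_by_others("robot_b")])  # ['person_1']
print(catalog.negotiate("search_room_2", {"robot_a": 4.2, "robot_b": 1.7}))  # robot_b
```

A deterministic tie-break is what lets each robot run the same rule locally and still agree on who owns a task.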

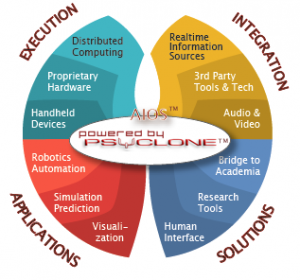

Psyclone 2.0 already supports user-authored native C++ modules running in a mixed Windows/Linux cluster environment. We have now added native Python 2.7 and 3.5 support, so our users can create either inline or separate modules written entirely in Python. As part of the CoCoMaps project, we are now integrating with ROS (Robot Operating System) via Python.

Later this year we plan to release version 2.0 of the Psyclone platform. It is a complete rewrite of Psyclone 1.5 that keeps all the old features intact and adds massive performance improvements, the merging of discrete and streaming data messaging, drumbeat signals for simulations and a completely new web interface, PsyProbe 2.0.

The CoCoMaps team is in Hannover demonstrating our turn-taking technology for natural robot-human conversations. You can find us in Hall 17 at the ECHORD stand C70. We are here with three other cool robotics projects and are attracting a lot of attention.